A human being should be able to change a diaper, plan an invasion, butcher a hog, conn a ship, design a building, write a sonnet, balance accounts, build a wall, set a bone, comfort the dying, take orders, give orders, cooperate, act alone, solve equations, analyze a new problem, pitch manure, program a computer, cook a tasty meal, fight efficiently, die gallantly. Specialization is for insects.

-Robert A. Heinlein

Monday, October 29, 2012

Live Recording from Y2KX+2

Friday, October 26, 2012

Y2KX+2 Livelooping Festival

Last week I had the privilege to perform at the 12th annual Y2K Livelooping Festival in California. This festival is, by nature and design, as eclectic and wonderful as organizer Rick Walker. At times, it seemed performers shared nothing but an attentive audience and an interest in using the techno-musical wizardry of livelooping.

Among the 50+ excellent artists I had a chance to hear, a few stood out for me:

- Luca Formentini (Italy) played a wonderful set of ambient guitar in San Jose and I really connected with his approach to improvised music.

- Emmanuel Reveneau (France) had an amazing set in Santa Cruz. For this second of two sets at the festival, I felt that Emmanuel had soaked up a lot of whatever was in the Santa Cruz air that week and he let it influence his music. His loop slicing was especially inspired... I can't wait for the release of the software he made with his computer-savvy partner.

- Hideki Nakanishi, a.k.a. Mandoman (Japan), gets an unbelievable sound out of a mandolin he built himself.

- John Connell only used an iPhone and a DL4 for his set. This minimalist approach really worked for him and it reminded me that the simple option is often the best option. I hope he'll check out the soon-to-be-released Audiobus app as it will open up some possibilities for his music.

- Amy X Neuburg is one of my favourite loopers. I have an insatiable appetite for her unique combination of musicality and humour. Unfortunately I was setting up during her set and I couldn't give her music my full attention.

- Moe! Staiano played a great set for percussion instruments such as the electric guitar.

- Bill Walker played a laid-back and masterful set of lap steel looping.

- Laurie Amat's birthday set (with Rick Walker) was simply the most appropriate way to end the festival.

- Shannon Hayden: Remember that name (you'll be hearing her music in your favourite TV shows soon enough).

The collegiality among the performers was a high point of my participation in this festival. I had the occasion to enjoy discussing the philosophical aspect of improvised experimental music with Luca, sharing notes on the business side of music with Shannon, listening to Laurie tell us about her collaboration with Max Mathews, witnessing technical demonstrations from Emmanuel, Bill and Rick, and listening to my housemate Paul Haslem practice on hammered dulcimer.

The Test of Performance

Personally, my participation in the festival was an opportunity to put my meta-trombone project to the test of performance. As with any new performance system, there were both positive and negative points to these first two maiden voyages. Encouragingly, I was quite satisfied with the varied timbres I could produce with the meta-trombone. I also enjoyed the drone-like feel of some of the loops and I liked the hypnotic phasing I employed.

However, not everything went well. My software crashed midway through my performance in Santa Cruz and I was forced to restart it. Thankfully, this is something I had practiced and I was able to keep playing acoustically on the trombone while the software came back online. It did not take very long and many people told me they did not even notice the crash…

More problematic, as I listen to the recorded performances, I feel there is something missing. I find the conceptual aspects of the meta-trombone quite stimulating; however, conceptually interesting music does not necessarily translate to good music (music people want to hear). I tend to get overly interested in the conceptual part, but I need to focus on the music now that the concepts are firmly in place.

I talked it over with other performers: Emmanuel suggested I form a trio with a bassist and a drummer so that I could rely on them to anchor the narrative aspects; Luca thought I needed to think more about my transitions. Both suggestions will need to be explored as I continue work on the meta-trombone.

Next Steps

I'm currently editing the recordings of my two performances into accessible short 'songs' for easy consumption. While the meta-trombone still requires work, I feel that this point in its development is still worthy of documentation and I stand by the recordings I made in California.

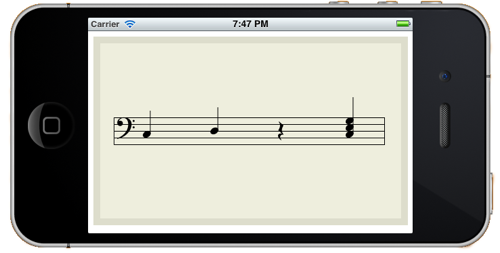

One of the first things I want to develop further is the role of the notation metaphor in the meta-trombone. Currently, trombone performance is interpreted by the computer software and the notes that I play execute code (specifically Mobius scripts). I would like to expand this by creating algorithms that will send notation to be displayed on my iPod touch based on what notes were previously played. Since meta-trombone notes serve both as musical material and as control signals, the software will be able to suggest changes to either the music or the system states by displaying music notation. I already have a working app that displays music notation on iOS in real-time through OSC and it is generating quite a bit of buzz. I'll have to integrate it into performance software for iOS that will ultimately replace TouchOSC, which I currently use as my heads-up display (see photo above).

Another avenue for further exploration would be to diversify the computer code that can be executed by playing notes. I have a couple of ideas for this and I think I will turn to Common Music to help implement them. Out of the box, Common Music can respond to a MIDI note-on message by executing a Scheme procedure, so it will be easy to integrate into my existing system.

I'm also looking to perform more with the meta-trombone and I'm actively looking for playing opportunities. There's a possible gig in New York City in mid-April (2013), so if anyone can help me find anything else around that time in NYC, it would make it a worthwhile trip.

Friday, July 27, 2012

Meta-Trombone Revisited

The recent release of version 2.0 of Mobius has spurred me to redesign my meta-trombone Bidule patch. Since I can have both the new and the old version in the same patch, my matrix mixer (and some of the most complex patching) can be eliminated by using both versions of the looper.

The first looper will be the one that is “played” by trombone notes. This is what I mean by playing the looper:

- trombone notes will trigger the loop playback from a position determined by the note value

- and/or trombone notes will change the playback rate relative to the note played

- and the amplitude of the loop will follow the trombone performance by using an envelope follower.
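The three behaviours above can be sketched in a few lines of Python. This is only an illustration, not the Mobius script or Bidule patch itself: the reference note, the note range and the linear position mapping are assumptions chosen for the example, and only the equal-temperament rate formula is standard.

```python
# Hypothetical mapping from a detected MIDI note to loop playback settings.
# REFERENCE_NOTE, LOW_NOTE and HIGH_NOTE are illustrative choices, not values
# taken from the actual patch.

REFERENCE_NOTE = 58   # B-flat 3 (with middle C = 60): plays at the original rate
LOW_NOTE = 40         # lowest note, mapped to the start of the loop
HIGH_NOTE = 77        # highest note, mapped to the end of the loop


def playback_rate(note: int) -> float:
    """Rate multiplier for a note: one semitone is a factor of 2 ** (1 / 12)."""
    return 2.0 ** ((note - REFERENCE_NOTE) / 12.0)


def start_position(note: int, loop_frames: int) -> int:
    """Frame at which playback restarts, spread linearly over the note range."""
    clamped = max(LOW_NOTE, min(HIGH_NOTE, note))
    fraction = (clamped - LOW_NOTE) / (HIGH_NOTE - LOW_NOTE)
    # Keep the result inside the loop even for the highest note.
    return min(loop_frames - 1, int(fraction * loop_frames))


def apply_envelope(loop_sample: float, envelope: float) -> float:
    """Scale a loop sample by the envelope follower's level (0.0 to 1.0)."""
    return loop_sample * max(0.0, min(1.0, envelope))
```

With these assumed settings, a note one octave above the reference doubles the playback rate and a note one octave below halves it.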

I’ll have a second instance of Mobius down the line that will resample the output of the first looper in addition to (or in the absence of) any incoming audio. Effects will be applied after audio in, after the envelope follower and after the resampling looper. I’ve yet to determine exactly what those effects will be, but the success of my vocal set patch leads me to consider a rather minimalist approach.

Speaking of minimalism, I’ve been listening to a lot of Steve Reich these days and I’d like to incorporate some phasing pattern play into my set for my upcoming performance at this year’s Y2K festival. One way to quickly create an interesting phasing composition is to capture a loop to several tracks at once and then trim some of the tracks by a predetermined amount. This can be easily accomplished with a script and I’ve been toying with some ideas along those lines.
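To get a feel for how trimmed copies of one loop drift apart and come back together, here is a rough Python sketch of the arithmetic. It is not a Mobius script; the function names are made up for the example, and lengths are counted in whatever unit is convenient (frames or beats).

```python
from functools import reduce
from math import gcd


def trimmed_lengths(loop_length: int, trims: list[int]) -> list[int]:
    """Length of each track after removing its trim amount from the shared loop."""
    lengths = []
    for trim in trims:
        if not 0 <= trim < loop_length:
            raise ValueError("trim must be non-negative and shorter than the loop")
        lengths.append(loop_length - trim)
    return lengths


def time_until_realigned(lengths: list[int]) -> int:
    """Time before every track is back at its start together (the LCM)."""
    return reduce(lambda a, b: a * b // gcd(a, b), lengths)


def offsets_at(time: int, lengths: list[int]) -> list[int]:
    """Playback position of each track after `time` units have elapsed."""
    return [time % length for length in lengths]
```

For example, with an 8-beat loop captured to two tracks and the second one trimmed by 2 beats, the track lengths are 8 and 6, and the two copies line up again every 24 beats.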

Something else to which I’ve given some consideration is the development of midi effects to insert on the midi notes interpreted from the trombone performance. Some midi effects that would be easy to implement:

- midi note delay;

- arpeggiator;

- remapper (to specific key signature);

- transposer.

It will be interesting to see what impact these effects will have on the loop playback of the first looper. Another idea is to remap notes to parameter selection or note velocity to parameter value.
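As a rough idea of how small these effects are, here is a Python sketch of the four listed above. It is not the Bidule implementation: notes are plain MIDI note numbers, events are hypothetical (time, note) pairs, and the remapper assumes a scale that includes the tonic and simply snaps downward. Notes at the very bottom of the MIDI range are not handled specially.

```python
# Notes are MIDI note numbers (0-127); events are (time_in_seconds, note) pairs.
# All names and defaults here are illustrative assumptions.

MAJOR_SCALE = (0, 2, 4, 5, 7, 9, 11)  # semitone offsets from the tonic


def transpose(note: int, semitones: int) -> int:
    """Shift a note, clamped to the valid MIDI range."""
    return max(0, min(127, note + semitones))


def remap_to_key(note: int, tonic: int = 0, scale=MAJOR_SCALE) -> int:
    """Snap a note down to the closest pitch at or below it that is in the key."""
    degree = (note - tonic) % 12
    # Largest scale offset that does not exceed the played pitch class
    # (the scale must contain 0 for this to always find a match).
    snapped = max(offset for offset in scale if offset <= degree)
    return note - (degree - snapped)


def delay(events, seconds: float):
    """Return a copy of the events with every note pushed back in time."""
    return [(time + seconds, note) for time, note in events]


def arpeggiate(note: int, intervals=(0, 4, 7), start: float = 0.0,
               step: float = 0.125):
    """Expand one note into a sequence of events, one per interval."""
    return [(start + i * step, transpose(note, interval))
            for i, interval in enumerate(intervals)]
```

With the defaults, `arpeggiate(60)` turns a single middle C into a C major triad spread over a quarter of a second, and `remap_to_key(61)` pulls a C sharp down to C.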

Another significant change is that I’ve acquired hardware to interpret midi notes from trombone performance. I’ve decided to go with the Sonuus I2M instead of my previously discussed approach mainly because I was wasting too much time trying to make the ultrasonic sensor work properly. Bottom line, it wasn’t that interesting and I’d rather be playing music. My current plan is to use a contact microphone to feed audio to the I2M and to have a gate on the midi notes it generates in Bidule that I’ll activate with a footswitch.

I’ll also be designing performance software for iOS as I intend to attach an iPod touch to the trombone to serve as my heads-up display for various system states (updated wirelessly with OSC). I’ll be controlling the iPod with a Bluetooth page-turning footswitch. One pedal on the footswitch will change between different screens and the other pedal will activate an action available on that screen. For instance, on the notation screen, pressing the action pedal will display a new melodic line (either algorithmically generated or randomly chosen from previously composed fragments).

Now all I have to do is build it (and they will come… or more accurately, I will go to them).

Thursday, July 12, 2012

Bring a map

Before beginning this particular mapping, I had a vision I wanted to instantiate. I wanted a system that would allow me to quickly create complex and ever evolving loops using only words and other vocal sounds. I also wanted to limit myself to musique concrète manipulations: loops, cutting and splicing sounds, delay, pitch shifting and reverb.

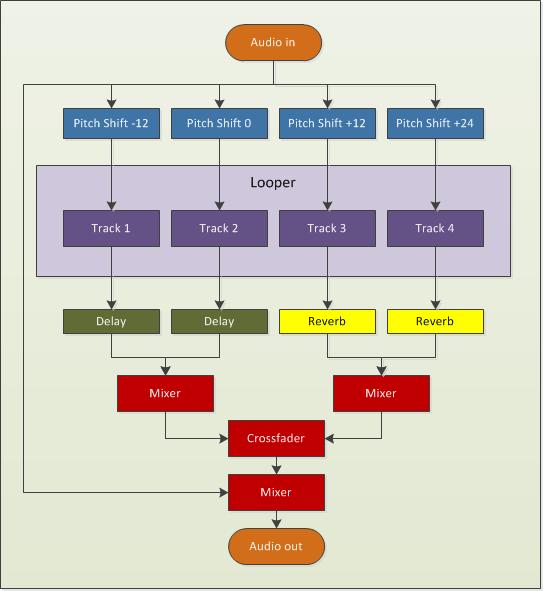

This is the audio flow I came up with:

Incoming audio is sent to outputs and also split to four tracks on a multitrack looper. Before reaching the looper, each signal path goes through a pitch shifting effect. Each track then goes to its own post-looper effect. Tracks 1 and 2 go to a delay while Tracks 3 and 4 go to a reverb. The tracks in each group are mixed together and the two results are sent to a crossfader that selects between these two sources. The output of the crossfader is mixed with the audio input and sent out.

My looper is Mobius. I could’ve used another looper for this project, but my familiarity with this software and ease of implementation won out over wanting to play with another looper (I’ve had my eye on Augustus for a while).

My pitch shifter is Pitchwheel. It’s a pretty interesting plugin that can be used on its own to create some interest in otherwise static loops. Here, I’m only using it to shift the incoming audio, so it’s a pretty straightforward scenario.

My reverb is Eos by Audio Damage. Do you know of a better sounding reverb that is also CPU friendly? I can’t think of any. My delay in this project is also by Audio Damage. I’m using their Discord3 effect that combines a nice delay with some pitch shifting and filtering with an LFO thrown in to modulate everything. This effect can really make things sound weird, but I’ll be using more subtle patches for this project.

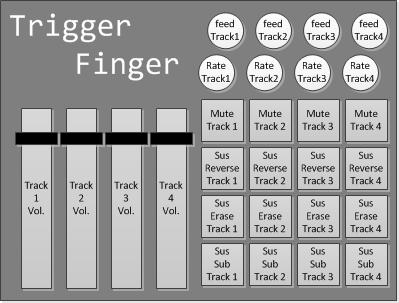

To control all of this, I’ll be using my trusty Trigger Finger to control the looper and my Novation Nocturn to control the effects. Here’s what I decided to do for looper control:

Starting on the left, the faders will control the volume of my tracks in Mobius. The pads and rotary dials on the right are grouped by column and correspond to tracks 1 to 4. Each button performs the same function, but on different tracks. The bottom row of pads call the sustain substitute function on a given track. The row immediately above it does the same thing, but will also turn off the incoming audio, so it will act like my erase button (with secondary feedback determining how much the sounds will be attenuated). The next row up sends the track playing backwards for as long as the button is pressed and the final row of buttons mutes a given track. The first rotary dial controls the playback rate of a given track and the top one controls its secondary feedback setting.

To control the effects, this is the mapping I came up with for the Nocturn:

The crossfader is obviously used to control the crossfader between the two track groups. After that, each track has two knobs: one that controls the amount of pitch shift to audio coming in to the track and another that controls the wet/dry parameters of the track's post-looper effect. The pads on the bottom will select different plugin patches, but the last one on the right is used to reset everything and prepare for performance. Among other things, it will create an empty loop of a specified length in Mobius, which is needed before I can begin using the sustain substitute function. Essentially, I’ll be replacing the silence of the original loop with the incoming audio.

One thing I won’t be doing is tweaking knobs and controlling every single parameter of my plugins. I’ll rely on a few well-chosen and specifically created patches instead. Also, keeping the effects parameters static can be an interesting performance strategy. When I heard Mia Zabelka perform on violin and body sounds last year at the FIMAV, one thing that struck me was that she played her entire set through a delay effect without once modifying any of its parameters. The same delay time and the same feedback throughout. For me, this created a sense of a world in which her sounds existed or a canvas on which her work lived. It’s like she changed a part of the physical reality of the world and it made it easier to be involved in her performance because I could predict what would happen. Just as I can instinctively predict the movement of a bouncing ball in my everyday universe, I became able to predict the movements of sound within the universe she created for us with her performance.

Here's a recording I made tonight by fooling around with this setup:

Tuesday, May 29, 2012

New Album Release: sans jamais ni demain

the longing for repetition

“Happiness is the longing for repetition.”

---Milan Kundera

This is a song I made for CT-One Minute. All sounds are derived from a 10-second bass clarinet phrase sample that can be downloaded freely from the Philharmonia Orchestra's website. The sample was played back at various playback rates, forward and backward, through various envelopes using the Samplewiz sampler on my iPod. This performance was recorded in one take with all looping and effects done in Samplewiz. No further editing or effects except for copying and pasting the beginning at the end to bring closure to the piece.

I approached Samplewiz as a livelooper, since, in "note hold" mode, every note on the keyboard can be seen as a track on a multi-track looper (each with a different playback rate). For this piece, I used the forward and backwards loop settings in the wave window, so things get to sound a bit different. I added some delay and messed with the envelope and it started to sound nice. Once I had a good bed of asynchronous loops, I left "note hold" by tapping rather than swiping the control box (this kept the held notes). I then changed the settings around and played over the loops without "overdubbing".

Samplewiz is quite powerful... You can also change the loop start and end points in between notes to add variety, without affecting the notes that are already being held.

tutus de chemin

This is the soundtrack for a short film I made in a weekend with my wife. I started with a vocal recording of my wife that I sent through Paul's Extreme Sound Stretch. The resulting audio file was played back as a loop in Bidule. I sent the audio to a pitch shifting plug-in (I believe I was using PitchWheel at the time) and then to a midi gate group and finally to the Mobius looper. I performed the sounds two-handed on my Trigger Finger. One hand was controlling a fader that was assigned to pitch shifting and the other was triggering pads to control the midi gate (the note envelope) and various functions in Mobius.

Three of a kind

This piece started out as an assignment for a course in Electroacoustic composition I took at SFU a few years ago. The sound source was a homemade instrument, but everything was mangled and cut-up. This piece features heavy use of the short loop fabrication technique familiar to readers of this blog. I used Acid to assemble everything and add some effect automation throughout the piece.

le train

This is the soundtrack to a short animation film I made last year. I used Soundgrain to isolate parts of the original sound's spectrum and used that software to create loops that I mixed while recording. I think this musical cutup is well-matched with the visual cutup it was meant to accompany.

Game music

This song was made using my soon-to-be-released iOS app: BreakOSC! This app is a game that sends OSC messages based on in-game events. In this case, when the ball hit blue and green bricks, Bidule triggered four instances of iZotope's Iris. The paddle served as a cross-fader and mixed all those sounds together. The results were sent to a generous amount of reverb courtesy of Audio Damage's Eos.

sans jamais ni demain

Another composition I made for the aforementioned course in electroacoustic composition I took at SFU. The only sound source for this piece is a recording of myself reading an old poem I wrote in high school. The slow moving textures were made by isolating parts of those words, slowing them down and layering them over each other to create very long notes of varying pitch that fade in and out over time. The more rhythmic stuff I made using a now familiar technique.

July 8 2011

This piece is a recording of a live (from my basement) performance of what will one day become my meta-trombone. A short loop is created at the top (what is heard twice in the beginning) and then altered in different ways determined by trombone performance.

Twice through the looking glass

This song was also made for CT-One Minute using the exact same sound source as the longing for repetition. This time, however, I used Iris to change the character of the sound and created two different sound patches. I made two recordings with each of these patches by triggering the sounds with my new Pulse controller. My three-month-old daughter also took part by adding her own surface hitting contributions, making this our first father-daughter collaboration. Once I had made these two recordings, I brought them into Bidule and placed them into Audio file players. The amplitude of output of each player was controlled via faders on my Trigger Finger and the result was recorded to file.

Wednesday, May 2, 2012

Displaying musical notation in iOS

In case you're wondering, the easiest way I've found to display programmatically generated musical notation on the iPhone is with VexFlow. It's a JavaScript library, so this means I have to put it in a UIWebView object through an HTML document that loads all the relevant files. To call the JavaScript functions, I send a stringByEvaluatingJavaScriptFromString: message to the UIWebView object. It all works very well, so that takes care of the uninteresting part of that project… now I get to learn all I can about algorithmic composition!