Internet woes

The internet has not been all good to me recently. I often shop online and encourage everyone to do so, but when things go bad, it can (apparently) be a pain to get your money back.

My first (and second) internet mishap happened while I was trying to buy an RME Fireface 800 on Ebay. On two separate occasions, after winning the item and paying through PayPal, Ebay removed the listing because they suspected it was fraudulent. I got my money back in full in both cases, but it took a couple of weeks for PayPal to go through its formal complaint process. Weeks during which my money was tied up and I couldn't use it to buy a Fireface 800. After going through this twice and suffering through another bad experience (see below), I decided to buy a new interface from a Canadian store. The transaction went smoothly and I had the interface within the week.

Internet woes (Part II)

My internet misfortunes continued as a result of a transaction I initiated in late June, when I ordered a mute from an online retailer. This mute has a microphone pickup inside it and I intended to use it in my meta-trombone project, so I was quite keen to get it (and very happy to find a retailer in North America). But it wasn't coming. So I contacted the seller in July and again in August. By mid-August I wanted my money back. When I received no reply from the seller, I lodged a complaint with the Better Business Bureau and informed the seller. No reply from the seller.

Early in September, I reviewed the seller's novel approach to customer service on an internet forum and I informed the seller. The seller promptly went apeshit. Whereas he could've taken this opportunity to renew communications with me and apologize for missing my previous correspondence, he called me a liar and publicly insulted me on the forum. Certainly not the professional behaviour one expects from a seller…

Regardless, the seller agreed to refund me (less restocking fees). However, the seller also sent an email to the Better Business Bureau impersonating me, in which 'I' apologized and withdrew my complaint. I won't actually name the seller (due to repeated threats of legal action), but I would encourage any reader to contact me before placing any significant order for musical equipment from an online retailer located in the northeastern United States.

Computer woes

One thing you shouldn't do with your MacBook Pro is drop it on the floor. Take my word for it. No need to try it for yourself. This could've been much worse, but I got off with cosmetic bruises and a dead hard drive. This is not too problematic, since I'm rather paranoid about backing up my data. Up until now, I've been using CrashPlan to back up my main drive, and while I thought it worked quite well, spending some time with its restore function has made me yearn for another approach. I've since switched to Carbon Copy Cloner and I heartily recommend it for all your OS X backup needs. The best thing about it is that it creates a bootable duplicate of your disk. This means there is no downtime and no need to reinstall software (and search everywhere for licence information). Also, it doesn't put your files in an undecipherable proprietary format that makes it impossible to locate them without using the software in question (that is so 20th century).

Learning stuff

Having successfully demonstrated competency in rudimentary university-level mathematics, I've started learning computer programming at l'Université du Québec en Outaouais (in accordance with my previously mentioned epiphany). I'm currently enrolled in the introductory Java programming course, but after going through the Stanford introductory course, this one is a breeze. Feeling inadequately challenged, I also signed up for a free online course at Stanford in artificial intelligence. I'm one of 180,000 or so students enrolled. That's nuts.

I've also been teaching myself to code in Scheme. Not only because it's the coolest programming language I've ever seen, but mainly because it's the lingua franca of livecoding (see Impromptu comments below).

To solidify my hold on both mathematics and programming, I've been using Java, C++ and Scheme to solve mathematical problems posted on Project Euler. I've only solved 19 problems to date and I wish I had more time to spend on these, since it's great fun, it makes me feel smart and I'm learning stuff. What else can you ask for?

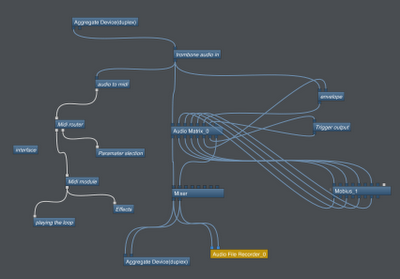

Meta-trombone

Not much development since I last posted about the meta-trombone, but a lot of conceptual work is happening behind the scenes (mostly thinking about effects). I'm now very interested in Impromptu and its novel blend of coding, AudioUnits, OSC and MIDI. I'm not sure exactly how it will be involved in this project, but I know it will be. One thing Impromptu allows is the rapid creation of AUs by compiling code with its AU wrapper. This would make it possible to create some signal processing wonders of my own and also to implement some of my Bidule patches as compiled code, thus increasing efficiency. Normally, there's little drawback to using Bidule, but my audio-to-MIDI patch is a bit of a drain on the CPU and could be improved by moving it to Impromptu. This livecoding platform also has some interesting video applications that I'm more than willing to explore.

Camera (film)

I purchased an old Canon film camera and a couple of lenses online and found an old light table locally. I'm amazed at how cheaply this equipment can be had… an equivalent digital setup would've meant an investment of several thousand dollars. For a bit over $200, I have seven lenses, an SLR and a light table. Amazing.

My main reason for getting these technological vestiges of a previous century is to further explore a collage technique that I learnt from Collin Zipp when I attended a workshop at Daïmon. During this workshop, I created a little video from cut-up and scratched film negatives. While the video is OK, what struck me was that a lot of the individual frames made stunning images in themselves, and I'd like to explore that in the coming months, perhaps using this technique to create a short comic.